ImaginateAR

Description

🖼️ Name of the tool:

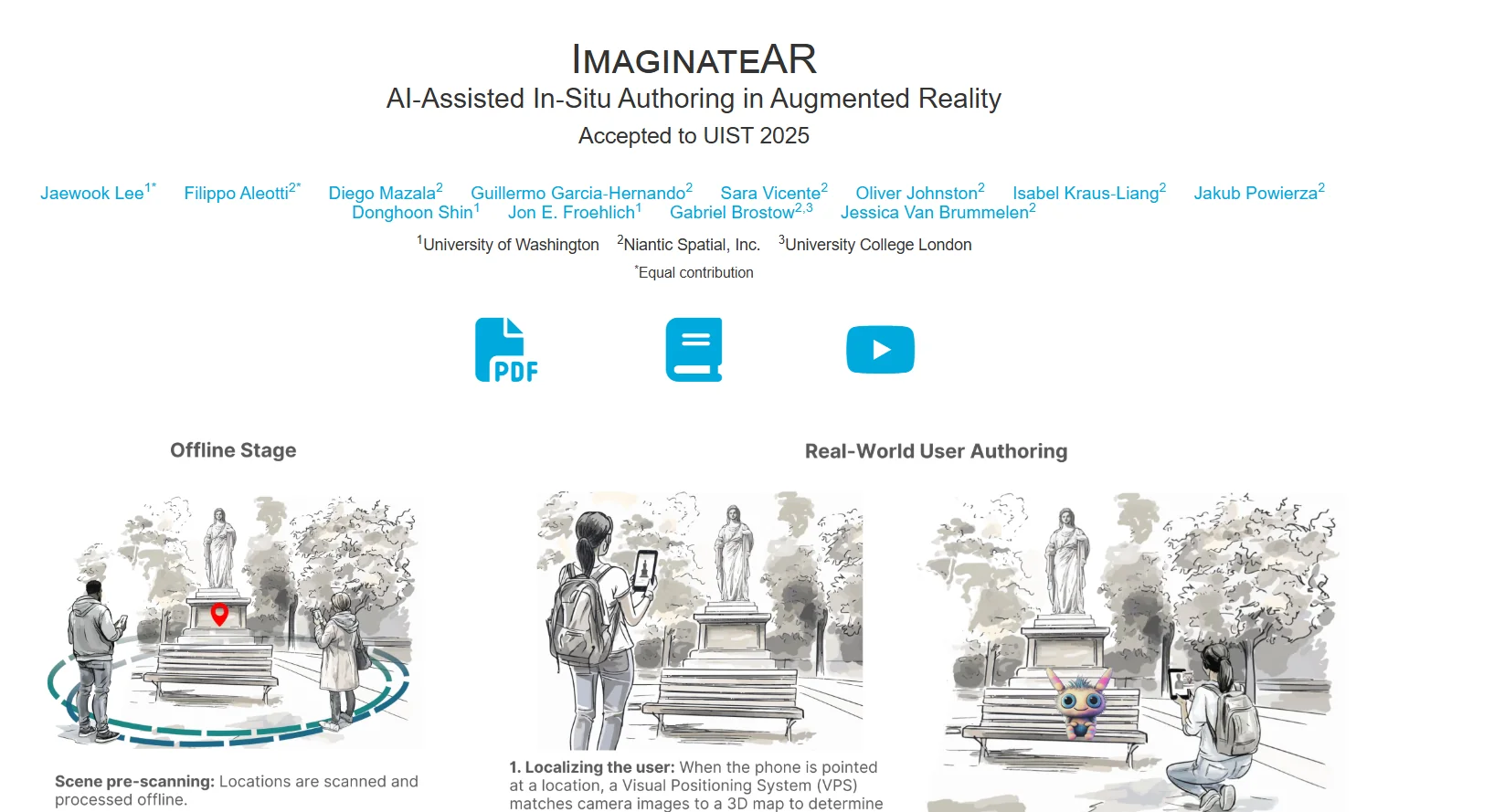

ImaginateAR

🔖 Tool Category:

AR Authoring Tool / Multimodal AI Tool

✏️ What does this tool offer?

It enables the user to author augmented reality (AR) scenes in the real environment via voice and text interaction, combining artificial intelligence (AI) with real-world scene understanding.

It uses 3D scene understanding to detect objects in the physical environment and converts them into an internal data structure that can be interacted with.
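As a rough illustration of what such an internal data structure could look like, here is a minimal sketch. The class names, fields, and methods (`SceneObject`, `Scene`, `find`, `describe`) are hypothetical and are not taken from the ImaginateAR paper or code; they only show the general idea of turning detected objects into something a program can query.

```python
from dataclasses import dataclass, field


@dataclass
class SceneObject:
    """One detected physical element (all field names are assumptions)."""
    label: str        # semantic label, e.g. "bench"
    center: tuple     # (x, y, z) box center in metres
    size: tuple       # (width, height, depth) box extents in metres


@dataclass
class Scene:
    """A flat collection of detected objects that can be queried."""
    objects: list = field(default_factory=list)

    def add(self, obj):
        self.objects.append(obj)

    def find(self, label):
        """Return every object whose label matches exactly."""
        return [o for o in self.objects if o.label == label]

    def describe(self):
        """Summarize the scene as text, e.g. for use in an AI prompt."""
        return "; ".join(
            f"{o.label} at ({o.center[0]:.1f}, {o.center[1]:.1f}, {o.center[2]:.1f})"
            for o in self.objects
        )


scene = Scene()
scene.add(SceneObject("bench", (1.0, 0.0, 2.0), (1.5, 0.5, 0.5)))
scene.add(SceneObject("tree", (-3.0, 0.0, 4.0), (1.0, 5.0, 1.0)))
print(scene.describe())
```

A text summary like the one produced by `describe` is one plausible way a scene representation could be handed to a language model, though the source does not state how ImaginateAR does this.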

When given a description such as "a dragon enjoying a campfire," it generates appropriate 3D assets and contextualizes them according to the surrounding environment.

It allows the user to manually modify these assets after they are generated, in terms of position, size, or shape.

Supports switching between authoring modes:

- AI-assisted mode

- AI-decided mode

- Full manual interaction

Fast generation of 3D meshes/shapes from textual descriptions, with improved generation time compared to previous methods.
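The three authoring modes can be pictured as a simple switch over who makes the final decision. The sketch below is an assumption made for illustration only: the source does not define the exact behavior of each mode, and the names `AuthoringMode` and `resolve_placement` are hypothetical.

```python
from enum import Enum


class AuthoringMode(Enum):
    AI_ASSISTED = "ai-assisted"
    AI_DECIDED = "ai-decided"
    MANUAL = "manual"


def resolve_placement(mode, ai_suggestion, user_choice=None):
    """Pick an asset position under the given mode (assumed semantics)."""
    if mode is AuthoringMode.AI_DECIDED:
        # Assumption: the AI's suggestion is applied directly.
        return ai_suggestion
    if mode is AuthoringMode.AI_ASSISTED:
        # Assumption: the AI proposes, and the user may override.
        return user_choice if user_choice is not None else ai_suggestion
    # MANUAL: assumption that only the user's own choice counts.
    if user_choice is None:
        raise ValueError("manual mode needs a user-provided position")
    return user_choice


print(resolve_placement(AuthoringMode.AI_ASSISTED, (1.0, 0.0, 2.0)))
```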

Uses a combination of techniques such as OpenMask3D, semantic mapping via vision-language models (VLMs), clustering, and mesh lifting.

⭐ What does it actually deliver, based on the available information and sources?

The research was accepted at the UIST 2025 conference.

The tool was empirically evaluated in a field study with 20 participants in outdoor environments. The results showed that users favored a hybrid approach: using AI to accelerate creativity while allowing manual adjustment when needed. In the technical evaluation, the scene understanding pipeline outperformed baseline models such as OpenMask3D in representation accuracy and in reducing the number of redundant boxes.

Generation of 3D assets is fast compared with some slower traditional methods.

🤖 Does it include automation?

Yes - automation is an essential part of the design:

The integration between AI and the scene system allows assets to be generated automatically from the user's description, without initial manual intervention.

The system can automatically suggest asset positions based on its understanding of the surrounding space.
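To make the idea of position suggestion concrete, here is a minimal sketch of one simple way it could work: scan a grid of candidate ground positions and pick the free one with the most clearance from detected obstacles. This is not ImaginateAR's actual method, which the source does not describe; the `Obstacle` type, the `suggest_position` function, and all parameters are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class Obstacle:
    """Circular ground footprint of a detected object (hypothetical type)."""
    x: float
    z: float
    radius: float


def suggest_position(obstacles, bounds=(-2.0, 2.0, -2.0, 2.0),
                     asset_radius=0.5, step=0.5):
    """Return the free grid point (x, z) with the most clearance, or None."""
    x_min, x_max, z_min, z_max = bounds
    nx = int(round((x_max - x_min) / step)) + 1
    nz = int(round((z_max - z_min) / step)) + 1
    best, best_clearance = None, -1.0
    for i in range(nx):
        for j in range(nz):
            x, z = x_min + i * step, z_min + j * step
            # Gap between the asset's edge and the nearest obstacle's edge.
            clearance = min(
                (math.hypot(x - o.x, z - o.z) - o.radius - asset_radius
                 for o in obstacles),
                default=math.inf,
            )
            # Keep only non-overlapping spots; prefer the roomiest one.
            if clearance >= 0 and clearance > best_clearance:
                best, best_clearance = (x, z), clearance
    return best


bench = Obstacle(x=0.0, z=0.0, radius=1.0)
print(suggest_position([bench]))
```

The function returns `None` when no candidate is free, which leaves room for the user to place the asset manually instead.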

The user can modify assets after generation, combining automation with manual control.

💰 Pricing model:

There are no details about pricing or subscription plans.

🆓 Free plan (or open access) details:

The project appears to be a research project (Academic/Prototype) and not a full commercial service available for public use.

The site showcases research and explains the tools, not a commercial interface or an invitation to subscribe immediately.

💳 Paid plan details:

There is no stated information about a paid plan or official commercial use on the site yet.

🧭 Method of access:

Via the official ImaginateAR page on the Niantic Spatial website (the web interface that showcases the project).

The project is linked to a research paper and public documentation that can be found on the site.

There appears to be no public UI or app that the general public can try at the moment; it is a preliminary research tool.

🔗 Link to the experiment/source:

ImaginateAR on the Niantic Spatial website